Tarun Sharma

INDIC DIALECT: A Multi Task Benchmark to Evaluate and Translate in Indian Language Dialects

Jan 15, 2026

Abstract:Recent NLP advances focus primarily on standardized languages, leaving most low-resource dialects underserved, especially in Indian scenarios. In India, the issue is particularly important: despite Hindi being the third most spoken language globally (over 600 million speakers), its numerous dialects remain underrepresented. The situation is similar for Odia, which has around 45 million speakers. While some datasets cover standard Hindi and Odia, their regional dialects have almost no web presence. We introduce INDIC-DIALECT, a human-curated parallel corpus of 13k sentence pairs spanning 11 dialects and 2 languages: Hindi and Odia. Using this corpus, we construct a multi-task benchmark with three tasks: dialect classification, multiple-choice question (MCQ) answering, and machine translation (MT). Our experiments show that LLMs like GPT-4o and Gemini 2.5 perform poorly on the classification task, while fine-tuned transformer-based models pretrained on Indian languages substantially improve performance, e.g., improving F1 from 19.6% to 89.8% on dialect classification. For dialect-to-language translation, we find that a hybrid AI model achieves the highest BLEU score of 61.32, compared to the baseline score of 23.36. Interestingly, due to the complexity of generating dialect sentences, we observe that for language-to-dialect translation the "rule-based followed by AI" approach achieves the best BLEU score of 48.44, compared to the baseline score of 27.59. INDIC-DIALECT is thus a new benchmark for dialect-aware Indic NLP, and we plan to release it as open source to support further work on low-resource Indian dialects.

Teaching Computer Vision for Ecology

Jan 05, 2023

Abstract:Computer vision can accelerate ecology research by automating the analysis of raw imagery from sensors like camera traps, drones, and satellites. However, computer vision is an emerging discipline that is rarely taught to ecologists. This work discusses our experience teaching a diverse group of ecologists to prototype and evaluate computer vision systems in the context of an intensive hands-on summer workshop. We explain the workshop structure, discuss common challenges, and propose best practices. This document is intended for computer scientists who teach computer vision across disciplines, but it may also be useful to ecologists or other domain experts who are learning to use computer vision themselves.

Epidemic Control Modeling using Parsimonious Models and Markov Decision Processes

Jun 23, 2022

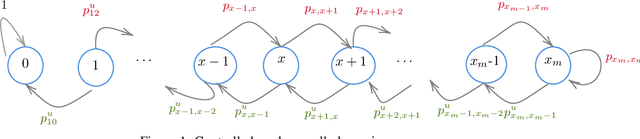

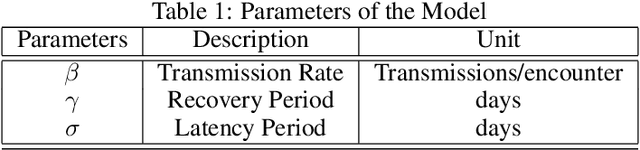

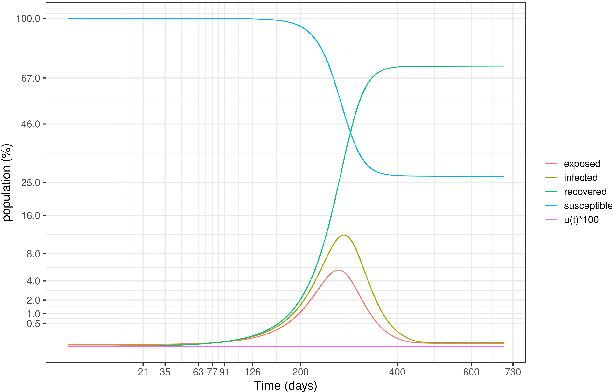

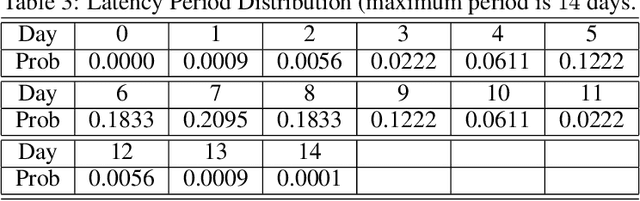

Abstract:Many countries have experienced at least two waves of the COVID-19 pandemic. The second wave is far more dangerous as distinct strains appear more harmful to human health, but it stems from complacency about the first wave. This paper introduces a parsimonious yet representative stochastic epidemic model that simulates the uncertain spread of the disease regardless of the latency and recovery time distributions. We also propose a Markov decision process to seek an optimal trade-off between the usage of the healthcare system and the economic costs of an epidemic. We apply the model to COVID-19 data from New Delhi, India and simulate the epidemic spread with different policy review times. The results show that the optimal policy acts swiftly to curb the epidemic in the first wave, thus avoiding the collapse of the healthcare system and the future costs of posterior outbreaks. An analysis of the recent collapse of the healthcare system of India during the second COVID-19 wave suggests that many lives could have been preserved if swift mitigation had been promoted after the first wave.

What are the visual features underlying human versus machine vision?

Nov 07, 2017

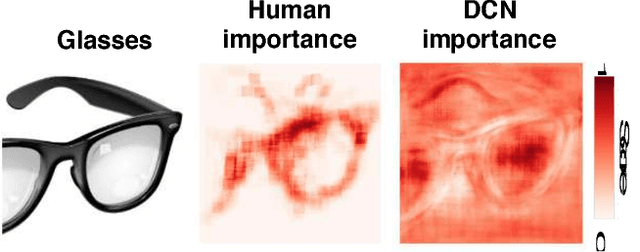

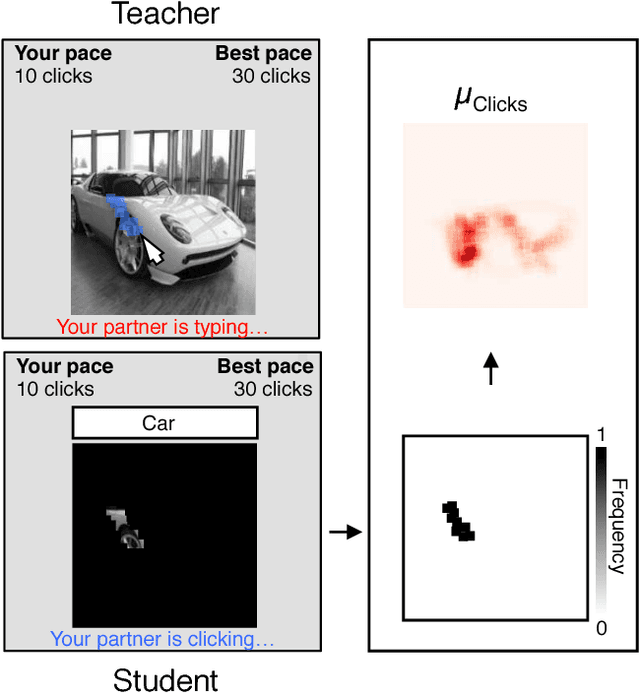

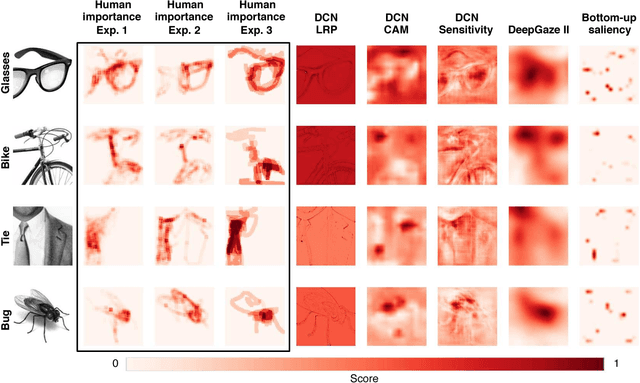

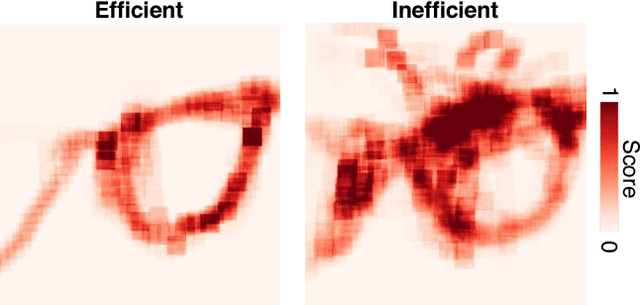

Abstract:Although Deep Convolutional Networks (DCNs) are approaching the accuracy of human observers at object recognition, it is unknown whether they leverage similar visual representations to achieve this performance. To address this, we introduce Clicktionary, a web-based game for identifying visual features used by human observers during object recognition. Importance maps derived from the game are consistent across participants and uncorrelated with image saliency measures. These results suggest that Clicktionary identifies image regions that are meaningful and diagnostic for object recognition but different from those driving eye movements. Surprisingly, Clicktionary importance maps are only weakly correlated with relevance maps derived from DCNs trained for object recognition. Our study demonstrates that the narrowing gap between the object recognition accuracy of human observers and DCNs obscures distinct visual strategies used by each to achieve this performance.
